Multimodal LLMs

With OpenAI's release of their GPT-4 Vision model, it has opened up the ability to analyze visual content (images and videos) and gather insights, which was previously not possible with existing Large Language Models (LLMs).

Large multimodal models (LMMs) combine information from different modalities, such as text and images, to understand and generate content. These models have the ability to process and generate information across multiple types of data, providing a more comprehensive understanding of the input.

Walkthrough

Let's walkthrough how to build an image analysis pipeline and AI copilot for insurance companies with Graphlit, with just a few API calls, and no AI experience required.

Completing this tutorial requires a Graphlit account, and if you don't have a Graphlit account already, you can signup here for our free tier. The GraphQL API requires JWT authentication, and the creation of a Graphlit project.

Analyzing a Chicago Building Fire

Back in 2017, when living in Chicago, a fire broke out in a furniture company across the street where where I lived.

I had taken pictures of the quick response of the Chicago Fire Department, which saved the buildings next door. (The furniture company building was a total loss, and ended up being torn down.)

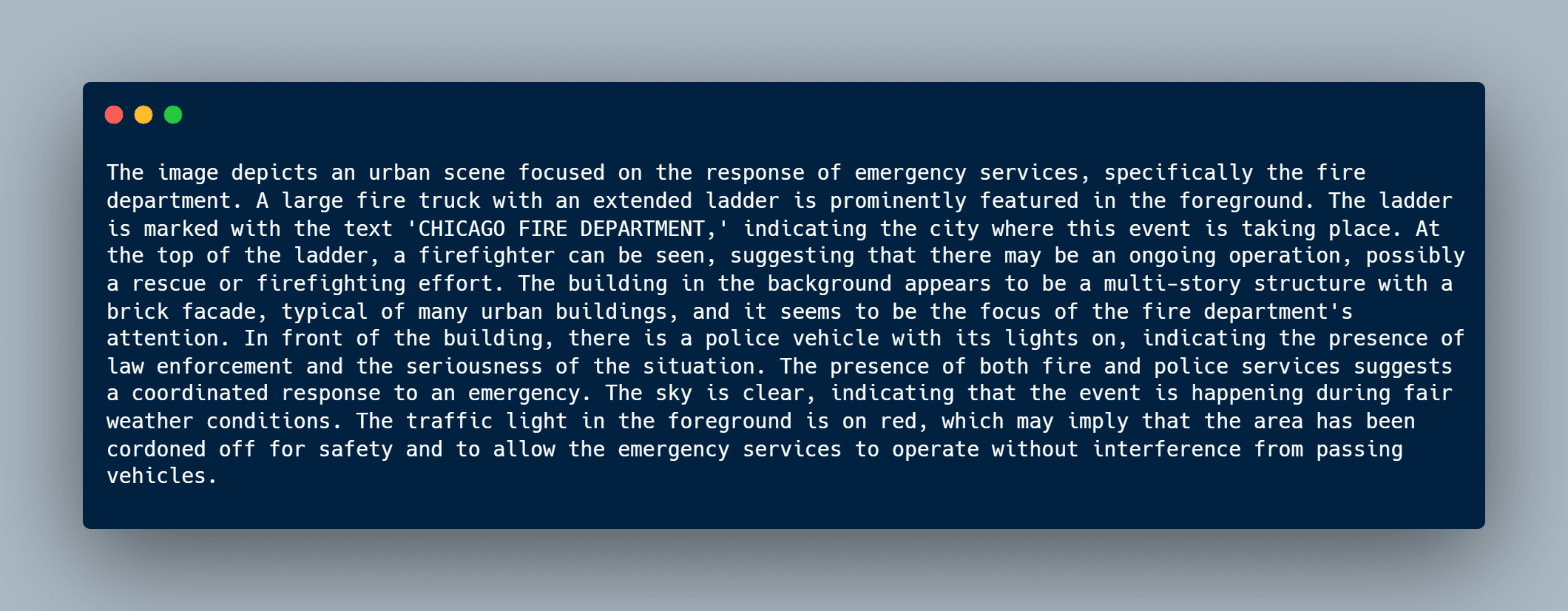

We can use the GPT-4 Vision model to provide a detailed description of images, like this example. Notice how it recognized the text "Chicago Fire Department" from the ladder, as well as recognizing the police vehicle.

Existing computer vision models can do OCR (Optical Character Recognition) or generate captions, but the ability to prompt an LLMs and get a response with this fidelity is game-changing for the AI industry.

Insurance Adjustment

After a fire, insurance adjusters have to process the damage and evaluate claims. This can be a valuable use case for the insights provided by the GPT-4 Vision model.

Insurance adjustment refers to the process of evaluating and settling insurance claims.

Insurance adjusters play a crucial role in assessing and processing insurance claims, including those related to fires. The use of images in their job can be beneficial in several ways:

- Damage Assessment: Images provide visual documentation of the extent of damage caused by the fire. Adjusters can use these images to assess the severity of structural damage, damage to personal belongings, and other affected areas.

- Evidence and Documentation: Images serve as valuable evidence in the claims process. They help document the condition of the property before and after the incident, providing a clear visual record that can be used to validate the claim and determine the appropriate compensation.

- Claim Validation: Images can help validate the legitimacy of a claim. Adjusters can compare the information provided in the claim with the visual evidence to ensure that the reported damages align with the actual conditions.

- Loss Estimation: Adjusters can use images to estimate the monetary value of the loss. Visual evidence helps in calculating the cost of repairing or replacing damaged property and possessions.

- Investigation and Analysis: Adjusters may need to investigate the cause of the fire. Images can aid in the analysis of fire patterns, origin, and potential contributing factors, helping to determine liability and prevent fraud.

- Claim Settlement Negotiations: During negotiations, images can be powerful tools to support the adjuster's position. They provide a visual representation of the damages, making it easier to convey the impact of the incident.

Automated Image Insights

Using Graphlit, we can ingest these images of the Chicago building fire from an Azure blob storage container.

First, we create a workflow object, which provides the instructions to extract labels, description and visible text from the images.

Note, we are assigning the LOW detail mode for the OpenAI GPT-4 Vision model. This allows the API to return faster responses and consume fewer input tokens for use cases that do not require high detail.

// Mutation:

mutation CreateWorkflow($workflow: WorkflowInput!) {

createWorkflow(workflow: $workflow) {

id

name

state

extraction {

jobs {

connector {

type

contentTypes

fileTypes

extractedTypes

openAIImage {

confidenceThreshold

detailMode

}

}

}

}

}

}

// Variables:

{

"workflow": {

"extraction": {

"jobs": [

{

"connector": {

"type": "OPEN_AI_IMAGE",

"openAIImage": {

"detailMode": "LOW",

"confidenceThreshold": 0.8

},

"extractedTypes": [

"LABEL"

]

}

}

]

},

"name": "GPT-4 Vision Workflow"

}

}

// Response:

{

"extraction": {

"jobs": [

{

"connector": {

"type": "OPEN_AI_IMAGE",

"openAIImage": {

"detailMode": "LOW",

"confidenceThreshold": 0.8

},

"extractedTypes": [

"LABEL"

]

}

}

]

},

"id": "9058d763-a6be-4c21-8364-67a6ad527f4b",

"name": "GPT-4 Vision Workflow",

"state": "ENABLED"

}

Now that we have the GPT-4 Vision Workflow object, we create a feed to read all the images from the "test" container, and "images/Chicago" relative path.

We specify the workflow by ID, which will be used to process these ingested images.

// Mutation:

mutation CreateFeed($feed: FeedInput!) {

createFeed(feed: $feed) {

id

name

state

type

}

}

// Variables:

{

"feed": {

"type": "SITE",

"site": {

"type": "AZURE_BLOB",

"azureBlob": {

"storageAccessKey": "redacted",

"accountName": "redacted",

"containerName": "test",

"prefix": "images/Chicago/"

}

},

"workflow": {

"id": "9058d763-a6be-4c21-8364-67a6ad527f4b"

},

"schedulePolicy": {

"recurrenceType": "ONCE"

},

"name": "Chicago Fire Photos"

}

}

// Response:

{

"type": "SITE",

"id": "77cbafeb-baa9-4002-ad2b-bfb96a7990e3",

"name": "Chicago Fire Photos",

"state": "ENABLED"

}

After the feed is created, Graphlit enumerates all files from the specified location on Azure blob storage, and automatically ingests them, while applying the specified content workflow. This happens asynchronously, and takes a minute or two to complete, depending on the available rate limits for the GPT-4 Vision model.

Now that we have these images in the Graphlit knowledge graph, we can start emulating an insurance adjuster, and gather insights from the extracted data.

Multimodal RAG

Similar to how LLMs can be used to analyze documents and web pages, here we are showing an example of Multimodal Retrieval Augmented Generation (RAG).

We are using the OpenAI GPT-4 Vision model, but this pattern can be used with any Multimodal LLM which provides an API for image analysis.

To begin our analysis, we create an LLM specification, which describes which model to use (GPT4_TURBO_128K_PREVIEW), and the LLM temperature, probability and completionTokenLimit.

Specifications act as a preset for interacting with the LLM, and can be reused across conversations. Developers can also assign a default specification to the Graphlit project, which is used for all conversations.

// Mutation:

mutation CreateSpecification($specification: SpecificationInput!) {

createSpecification(specification: $specification) {

id

name

state

type

serviceType

}

}

// Variables:

{

"specification": {

"type": "COMPLETION",

"serviceType": "OPEN_AI",

"searchType": "VECTOR",

"openAI": {

"model": "GPT4_TURBO_128K_PREVIEW",

"temperature": 0.1,

"probability": 0.2,

"completionTokenLimit": 512

},

"name": "GPT-4 128K Preview"

}

}

// Response:

{

"type": "COMPLETION",

"serviceType": "OPEN_AI",

"id": "be176ad2-6062-4499-a857-aa3b4bba047e",

"name": "GPT-4 128K Preview",

"state": "ENABLED"

}

Then, we create the conversation, and assign the specification object to use.

// Mutation:

mutation CreateConversation($conversation: ConversationInput!) {

createConversation(conversation: $conversation) {

id

name

state

type

}

}

// Variables:

{

"conversation": {

"type": "CONTENT",

"specification": {

"id": "be176ad2-6062-4499-a857-aa3b4bba047e"

},

"name": "GPT-4 128K Preview"

}

}

// Response:

{

"type": "CONTENT",

"id": "ca05660d-4de8-47fb-a7b4-13a28bd649cb",

"name": "GPT-4 128K Preview",

"state": "OPENED"

}

Now we have our conversation, which we can prompt multiple times.

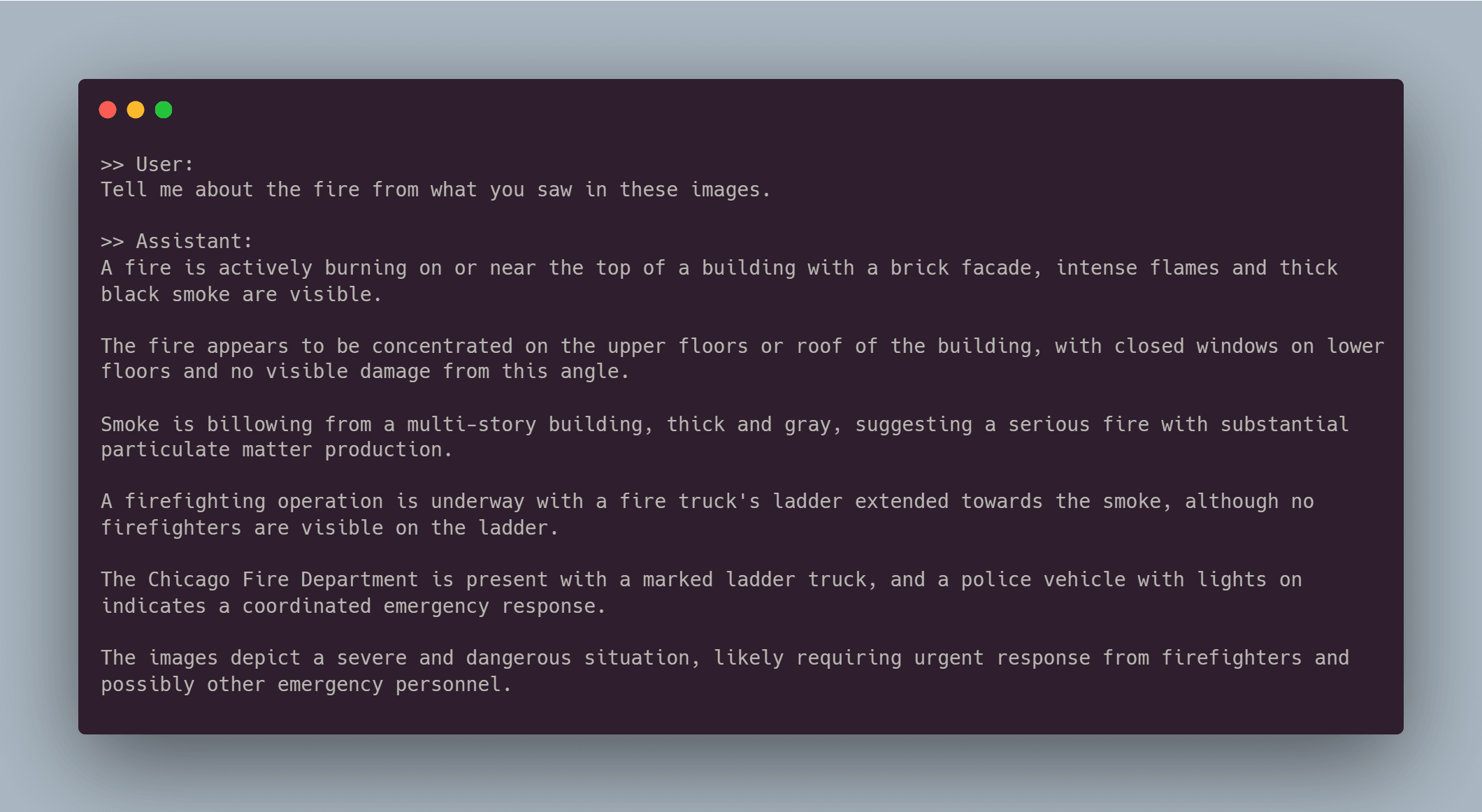

Let's find out what GPT-4 Vision recognizes in the images, and pass the prompt:

Tell me about the fire from what you saw in these images.

// Mutation:

mutation PromptConversation($prompt: String!, $id: ID) {

promptConversation(prompt: $prompt, id: $id) {

conversation {

id

}

message {

role

author

message

tokens

completionTime

timestamp

}

messageCount

}

}

// Variables:

{

"prompt": "Tell me about the fire from what you saw in these images.",

"id": "ca05660d-4de8-47fb-a7b4-13a28bd649cb"

}

// Response:

{

"conversation": {

"id": "ca05660d-4de8-47fb-a7b4-13a28bd649cb"

},

"message": {

"role": "ASSISTANT",

"message": "A fire is actively burning on or near the top of a building with a brick facade, intense flames and thick black smoke are visible.\n\nThe fire appears to be concentrated on the upper floors or roof of the building, with closed windows on lower floors and no visible damage from this angle.\n\nSmoke is billowing from a multi-story building, thick and gray, suggesting a serious fire with substantial particulate matter production.\n\nA firefighting operation is underway with a fire truck's ladder extended towards the smoke, although no firefighters are visible on the ladder.\n\nThe Chicago Fire Department is present with a marked ladder truck, and a police vehicle with lights on indicates a coordinated emergency response.\n\nThe images depict a severe and dangerous situation, likely requiring urgent response from firefighters and possibly other emergency personnel.",

"tokens": 221,

"completionTime": "PT5.9424315S",

"timestamp": "2023-11-10T05:22:16.483Z"

},

"messageCount": 2

}

GPT-4 Vision responds with detailed analysis of the fire scene.

Notice how it recognizes the Chicago Fire Department is on the scene, and how the fire is concentrated on the upper floors of the building.

That's useful, but since we want to emulate the role of an insurance adjuster, let's ask for more details on the severity of the fire.

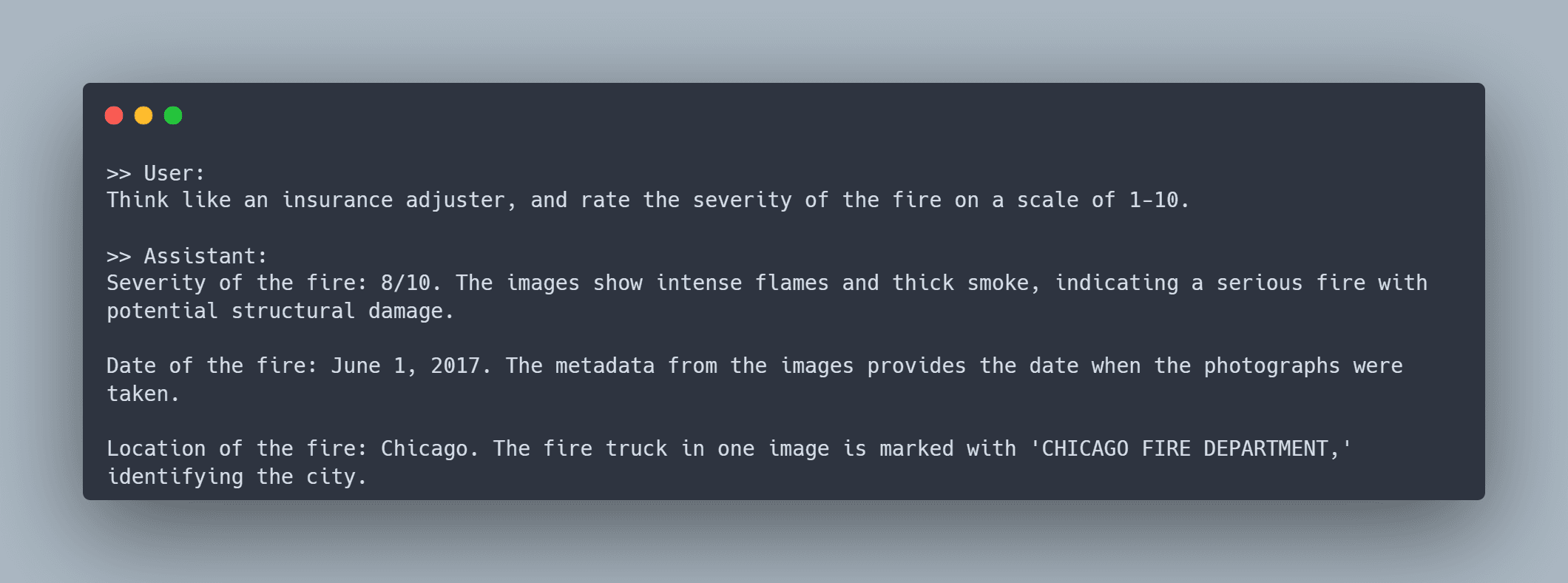

Think like an insurance adjuster, and rate the severity of the fire on a scale of 1-10.

// Mutation:

mutation PromptConversation($prompt: String!, $id: ID) {

promptConversation(prompt: $prompt, id: $id) {

conversation {

id

}

message {

role

author

message

tokens

completionTime

timestamp

}

messageCount

}

}

// Variables:

{

"prompt": "Think like an insurance adjuster, and rate the severity of the fire on a scale of 1-10.",

"id": "ca05660d-4de8-47fb-a7b4-13a28bd649cb"

}

// Response:

{

"conversation": {

"id": "ca05660d-4de8-47fb-a7b4-13a28bd649cb"

},

"message": {

"role": "ASSISTANT",

"message": "Severity of the fire: 8/10. The images show intense flames and thick smoke, indicating a serious fire with potential structural damage.\n\nDate of the fire: June 1, 2017. The metadata from the images provides the date when the photographs were taken.\n\nLocation of the fire: Chicago. The fire truck in one image is marked with 'CHICAGO FIRE DEPARTMENT,' identifying the city.",

"tokens": 123,

"completionTime": "PT4.2313014S",

"timestamp": "2023-11-10T05:33:03.48Z"

},

"messageCount": 4

}

Here we can see how GPT-4 Vision is doing deeper analysis, using the images as context, and leveraging the knowledge the model was trained on.

It rates the fire 8/10 because of the potential structural damage, and intensity of the flames and smoke.

It also recognizes the date of the fire, provided via image metadata by Graphlit, and understands the location of the fire based on the identification of Chicago Fire Department on the ladder.

Conversations can be leveraged to build AI-enabled chatbots or copilots. Conversations can also be integrated as an automated workflow for insurance companies to automatically understand any provided data for a claim.

Summary

We've shown you how to use a multimodal LLM, like OpenAI GPT-4 Vision, and the Graphlit Platform, to build an insights pipeline for use by insurance companies.

We look forward to hearing how you make use of Graphlit and our conversational knowledge graph in your applications.

If you have any questions, please reach out to us here.