Introduction

Have you found yourself juggling multiple tools alongside LlamaIndex in your project and realized that managing them has become more daunting than you anticipated? If so, you're not alone. In the rapidly evolving world of Retrieval Augmented Generation (RAG), maintaining an efficient and cohesive toolchain can be as crucial as the technology itself.

RAG is a powerful technique that combines the strengths of retrieval-based systems and language models to generate more accurate and contextually relevant responses. Two popular choices for developers looking to build RAG applications are LlamaIndex and Graphlit. In this post, we'll dive into a technical comparison of these frameworks across architecture, ease of use, the differences in their approaches for building RAG apps, and the challenges associated with each framework.

Architecture Comparison

First, let’s look at how these packages are designed and what a typical RAG architecture built with each tool looks like.

LlamaIndex Key Components

LlamaIndex is an open-source Python library that provides a set of tools for constructing an index over data to enable efficient retrieval for question-answering using LLMs. LlamaIndex itself doesn’t provide any RAG infrastructure or host any component of a RAG pipeline. Instead, it provides abstractions that help you interface with data sources, vector databases, and LLMs.

LlamaIndex has a few key components:

- Document Connectors: It supports loading data from various sources such as text files, PDFs, web pages, and more.

- Indexing: After the data has been ingested, LlamaIndex can organize and store that data in indexes. LlamaIndex supports several indexing structures, with vector stores being the most popular. It also integrates with external vector databases such as Chroma and Qdrant, among others.

- Query Engines: Once your data is ingested and indexed, you can query the index using natural language questions to retrieve relevant information from the indexed documents. When using external databases, LlamaIndex uses their retrieval strategies.

- LLM Integration: LlamaIndex can also integrate with popular LLM APIs and local LLM servers, and it lets you customize the LLM parameters used for generating responses. For a locally hosted model, however, LlamaIndex only helps you interface with it; it does not deploy the model itself. You can integrate an LLM with a query engine to augment the LLM's input with contextual data.

A Typical LlamaIndex Architecture

Now let’s see how those components work together with each other to build a RAG application.

LlamaIndex first fetches and ingests data from various sources such as PDF files, web pages, and databases. Its Document Connectors handle the extraction and processing of data from these sources.

Next, the document data is passed to the vector indexing component. This component utilizes embedding models to generate vector representations of the documents called embeddings. The embeddings capture the semantic meaning of the documents and enable efficient similarity-based retrieval. Embedding models like OpenAI’s Ada can be used to create these vector representations.

When a user asks a question, LlamaIndex retrieves the most relevant documents based on the vector similarity between the question and the indexed documents.

LlamaIndex integrates with LLM APIs like OpenAI’s GPT-3.5 to generate responses based on the retrieved document information. The retrieved documents are used to construct a prompt to provide relevant context for the LLM.

The LLM processes the prompt and generates a response. Finally, the generated response from the LLM is sent back as a response to the user's question.
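To make the flow above concrete, here is a minimal, self-contained sketch of the retrieve-then-prompt loop. It is not LlamaIndex code: `embed` is a toy bag-of-words stand-in for a real embedding model, and `retrieve` and `build_prompt` are hypothetical helper names used only for illustration.

```python
import math
from collections import Counter


def embed(text):
    """Toy 'embedding': a bag-of-words count vector (stand-in for a real model)."""
    return Counter(text.lower().split())


def cosine(a, b):
    """Cosine similarity between two sparse count vectors."""
    dot = sum(a[token] * b[token] for token in a)
    norm_a = math.sqrt(sum(v * v for v in a.values()))
    norm_b = math.sqrt(sum(v * v for v in b.values()))
    return dot / (norm_a * norm_b) if norm_a and norm_b else 0.0


def retrieve(question, chunks, top_k=2):
    """Return the top_k chunks most similar to the question."""
    query_vector = embed(question)
    ranked = sorted(chunks, key=lambda c: cosine(query_vector, embed(c)), reverse=True)
    return ranked[:top_k]


def build_prompt(question, context_chunks):
    """Combine the retrieved context and the question into one LLM prompt."""
    context = "\n".join(f"- {chunk}" for chunk in context_chunks)
    return f"Answer using only this context:\n{context}\n\nQuestion: {question}"


chunks = [
    "The Transformer relies entirely on attention mechanisms.",
    "Qdrant is a vector database.",
    "Attention maps a query and key-value pairs to an output.",
]
question = "What does the Transformer rely on?"
top = retrieve(question, chunks, top_k=1)
prompt = build_prompt(question, top)
print(prompt)
```

In a real application the prompt would then be sent to an LLM; the sketch stops at the prompt so that it runs without any API key.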

Graphlit Key Components

Unlike LlamaIndex, where you need to build your own AI infrastructure, Graphlit provides an end-to-end platform with pre-integrated components. This simplifies the development of RAG applications, allowing developers to focus on improving their app instead of building an AI infrastructure.

The key components of Graphlit are as follows:

- Data Ingestion: Graphlit supports ingesting content from various data sources such as PDFs, web pages, Slack, podcasts, emails, and GitHub issues. Feeds can also be set up for automated ingestion.

- Workflows: Content workflows process the ingested data and index it into the Graphlit knowledge graph. This can be queried later on to retrieve relevant context.

- Knowledge Graph: Graphlit’s most powerful feature is its knowledge graph that finds relationships between all the ingested data. Entities present in the data such as people, places, or organizations are extracted and linked to the source content via edges in the graph.

- Conversations: Conversations can be created over ingested content to build RAG apps. User prompts are processed, and relevant content is retrieved based on semantic search. The retrieved content, along with the user prompt, is formatted into an LLM prompt for generating a contextually relevant response.

- Specifications: Specifications are reusable LLM configurations that can be used in conversation.
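As a rough mental model of the knowledge graph described above, the sketch below links extracted entities to the content they came from and then finds content related through shared entities. This is an illustrative toy, not Graphlit's implementation; `index_content`, `related_content`, and the sample content IDs and entities are all hypothetical.

```python
from collections import defaultdict

# Edges from each entity to the content items that mention it.
entity_to_content = defaultdict(set)


def index_content(content_id, entities):
    """Record an edge from every extracted entity to its source content."""
    for entity in entities:
        entity_to_content[entity].add(content_id)


def related_content(content_id):
    """Return content items that share at least one entity with content_id."""
    related = set()
    for ids in entity_to_content.values():
        if content_id in ids:
            related |= ids
    related.discard(content_id)
    return related


index_content("paper.pdf", {"Google Brain", "Ashish Vaswani"})
index_content("podcast.mp3", {"Ashish Vaswani", "OpenAI"})
index_content("issue-42", {"Qdrant"})

print(related_content("paper.pdf"))
```

Here the PDF and the podcast become connected because both mention the same person, even though they were ingested from different sources.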

A Typical Graphlit Architecture

These components work together to form a RAG architecture in Graphlit. The platform handles data ingestion, knowledge extraction, and storage in a knowledge graph. When a user interacts through conversations, Graphlit retrieves relevant content based on semantic search and entity rankings. The retrieved content is combined with the user prompt and sent to an LLM for generating a contextually relevant response. Specifications allow for customization of the conversation and prompt strategies.

Let's dive deeper into how these components work together:

First, we create a workflow that specifies the steps to ingest data. This can include text extraction, audio transcription, PDF parsing, and other steps.

Graphlit's data ingestion component can then be used to ingest content from various sources, such as PDFs, podcasts, web pages, and GitHub. The processed content is then indexed in Graphlit's vector index.

As more content is ingested and processed, Graphlit builds a knowledge graph that captures the relationships between content, entities, and metadata. The knowledge graph forms a rich network of interconnected data, enabling powerful querying and retrieval capabilities.

When a user interacts with Graphlit through a conversation, the RAG process is triggered.

The user's prompt is analyzed, and relevant entities are extracted from it. Graphlit performs a semantic search on the ingested content, considering the extracted entities and the context of the prompt.

The LLM prompt, along with any specified conversation and prompt strategies, is sent to the chosen LLM for completion. The LLM generates a contextually relevant response based on the provided prompt and the retrieved content. The generated response is returned to the user as part of the conversation.

Comparing the Two Architectures

Both Graphlit and LlamaIndex aim to facilitate the development of RAG applications that leverage LLMs and efficient retrieval of information from unstructured data using vector databases.

However, Graphlit provides a hosted end-to-end platform, while LlamaIndex offers a set of modular tools for interfacing with an AI infrastructure and integrating with LLMs.

Graphlit also emphasizes the creation of a knowledge graph that captures entity relationships and enables semantic search across all ingested data, whereas LlamaIndex focuses on building efficient indexes and providing a query engine for retrieving relevant document chunks.

To understand the difference further, let’s see what it is like to build a RAG application using both these tools. We will build a RAG application to chat over a PDF.

Building a PDF Chatbot with LlamaIndex

Environment Setup

The first step is to install the LlamaIndex package. You can run the command below in a Jupyter notebook code cell, or drop the leading exclamation mark to run it in a terminal.

!pip install llama_index -q

We will be using a GPT model from OpenAI to build our RAG application, so we need to set up our OpenAI API key. LlamaIndex also uses OpenAI’s Ada embedding model to create embeddings. Grab your OpenAI API key from platform.openai.com and add it as an environment variable.

import os

os.environ["OPENAI_API_KEY"] = "<your-api-key-here>"

Next, let’s test to make sure that LlamaIndex is able to query OpenAI and get a response using the API key we set up.

from llama_index.llms.openai import OpenAI

response = OpenAI().complete("What is a transformer model?")

print(response)

Finally, you will need to download the PDF you want to use and save it in a directory. For this example, I will be using the “Attention is all you need” paper by Vaswani et al. Here is the link to the pdf. I saved the pdf in a folder named “data”. In the next step we will see how we can load this PDF with LlamaIndex.
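If you prefer to script the download step, a small helper along these lines could work. This is a sketch using only the Python standard library; `download_file` is a hypothetical helper name, and the `attention.pdf` file name is simply the one used in the LlamaParse example in this post.

```python
import shutil
import urllib.request
from pathlib import Path


def download_file(url, dest):
    """Download url to dest, creating the parent directory if needed."""
    dest = Path(dest)
    dest.parent.mkdir(parents=True, exist_ok=True)
    with urllib.request.urlopen(url) as response, open(dest, "wb") as out:
        shutil.copyfileobj(response, out)
    return dest


# Usage (needs network access):
# download_file("https://arxiv.org/pdf/1706.03762", Path("data") / "attention.pdf")
```

Some sites reject requests that do not come from a browser, so you may still need to download the file manually.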

Loading Data with LlamaIndex

Now that you have your environment set up, we can start to build our RAG application. The first step is to load data. LlamaIndex provides many Data Connectors to load data from different sources, such as a local folder, websites, Notion, and MongoDB.

One way to load data is to use the SimpleDirectoryReader class that LlamaIndex provides. We can use it to load the pdf we saved to the “data” directory.

from llama_index.core import SimpleDirectoryReader

documents = SimpleDirectoryReader("./data").load_data()

This class loads all the files in a folder and creates a LlamaIndex document. With our files loaded, we need to index our data in the vector store.

LlamaIndex recently released LlamaParse, their hosted document analysis solution. We can use this to load our documents as well. To use LlamaParse, you first need to create an account and an API key. Next, install the package:

!pip install llama_parse

Finally, you can create a parser with your API key and load the PDF from your local directory:

import nest_asyncio

nest_asyncio.apply()

from llama_parse import LlamaParse # pip install llama-parse

parser = LlamaParse(

api_key="<your-api-key>", # can also be set in your env as LLAMA_CLOUD_API_KEY

result_type="text" # "markdown" and "text" are available

)

# sync

documents = parser.load_data("./attention.pdf")

Creating a Vector Store and Indexing Data

Once the data is loaded, you need to create a vector store and index the data. We will be using the Qdrant vector database. First, you have to create a Qdrant Cloud account and a vector database cluster. Once your cluster is ready, you can create your Qdrant API key.

Next we will need to install packages and set up our Qdrant client.

!pip install llama-index-vector-stores-qdrant

Create your Qdrant client as follows. Remember to enter your Qdrant cluster url and API key.

import qdrant_client

client = qdrant_client.QdrantClient(

url="<your-qdrant-url-here>",

api_key="<your-qdrant-api-key-here>",

)

Next we can upload our document into the Qdrant vector database. We create a QdrantVectorStore and pass in our Qdrant client and a collection name.

We use the from_documents method of VectorStoreIndex to create embeddings of our PDF. We need to pass the documents we created and a StorageContext to the VectorStoreIndex.

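from llama_index.core import StorageContext, VectorStoreIndex

from llama_index.vector_stores.qdrant import QdrantVectorStore

vector_store = QdrantVectorStore(client=client, collection_name="my-collection")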

storage_context = StorageContext.from_defaults(vector_store=vector_store)

index = VectorStoreIndex.from_documents(

documents,

storage_context=storage_context,

)

LlamaIndex uses a SentenceSplitter to chunk the documents into smaller pieces and then creates a VectorStoreIndex to store the indexed data.

Setting up Qdrant and LlamaParse adds complexity to our project. You now have to manage two different vendors, and in the case of Qdrant you also need to manage your vector database cluster. In a later section we will see how Graphlit manages all this vendor wiring so that you can focus on improving your RAG application.

Retrieving Contextual Data and Querying

Now that your vector store is set up and your data has been indexed, you can query the index and retrieve contextual data using a RetrieverQueryEngine. You need to configure a retriever, response synthesizer, and postprocessor to assemble the query engine. Once your query engine is set up, you can invoke it with a question.

from llama_index.core import get_response_synthesizer

from llama_index.core.retrievers import VectorIndexRetriever

from llama_index.core.query_engine import RetrieverQueryEngine

from llama_index.core.postprocessor import SimilarityPostprocessor

retriever = VectorIndexRetriever(

index=index,

similarity_top_k=10,

)

# configure response synthesizer

response_synthesizer = get_response_synthesizer()

# assemble query engine

query_engine = RetrieverQueryEngine(

retriever=retriever,

response_synthesizer=response_synthesizer,

node_postprocessors=[SimilarityPostprocessor(similarity_cutoff=0.7)],

)

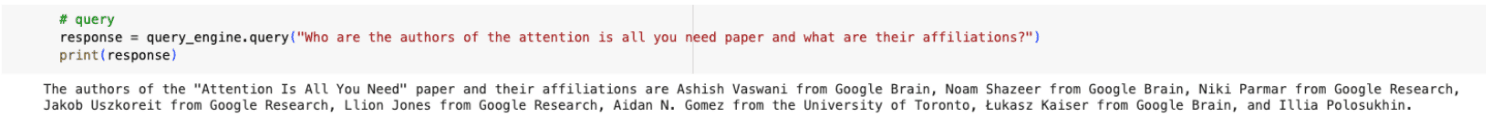

# query

response = query_engine.query("Who are the authors of the attention is all you need paper and what are their affiliations?")

print(response)

A query consists of three steps. The first step is to retrieve the most relevant document chunks for your query. You can select the top-k similar chunks you want to be retrieved. In this case, I have set it to 10.

Next, the retrieved chunks can be post-processed to filter some of them out, for example if their similarity score is too low or if they don’t contain certain keywords. In my case, I have set a similarity_cutoff of 0.7. This means that any retrieved chunk with a similarity score of less than 0.7 will be filtered out.

Finally, the last step is called response synthesis, where the contextual data, along with the query and any additional prompt, is sent to the LLM to get a response.
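The interplay between the top-k setting and the similarity cutoff can be sketched in a few lines of plain Python. This is a simplified illustration rather than LlamaIndex's own code; `select_chunks` and the sample scores are hypothetical.

```python
def select_chunks(scored_chunks, top_k=10, similarity_cutoff=0.7):
    """Keep the top_k highest-scoring chunks, then drop any below the cutoff."""
    top = sorted(scored_chunks, key=lambda pair: pair[1], reverse=True)[:top_k]
    return [(text, score) for text, score in top if score >= similarity_cutoff]


scored = [
    ("authors list", 0.91),
    ("abstract", 0.74),
    ("references", 0.42),
    ("appendix", 0.69),
]
kept = select_chunks(scored, top_k=3, similarity_cutoff=0.7)
print(kept)
```

Note that the cutoff is applied after the top-k selection, so a strict cutoff can leave the LLM with fewer than k chunks, or with none at all if nothing scores high enough.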

And that’s it! You have built a RAG application to chat with a PDF using LlamaIndex. Now let’s look at how we build the same RAG application with Graphlit.

Building a PDF Chatbot with Graphlit

Environment Setup

With Graphlit, building a RAG application is significantly simpler. You first need to install the graphlit-client package.

!pip install graphlit-client -q

Next you need to initialize the Graphlit client with your organization ID, environment ID, and JWT secret. You will find these values in your project settings in the Graphlit portal. You can paste these values directly, or you can read them from environment variables.

from graphlit import Graphlit

graphlit = Graphlit(

organization_id="<your-org-id-here>",

environment_id="<your-env-id-here>",

jwt_secret="<your-jwt-secret-here>"

)
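If you would rather not paste credentials into your code, a helper like the one below can collect them from environment variables and fail early when one is missing. This is only a sketch: the variable names and the `load_graphlit_settings` helper are assumptions for illustration, so use whatever names your deployment defines.

```python
import os

# Hypothetical environment variable names, keyed by the client argument they feed.
REQUIRED_VARS = {
    "organization_id": "GRAPHLIT_ORGANIZATION_ID",
    "environment_id": "GRAPHLIT_ENVIRONMENT_ID",
    "jwt_secret": "GRAPHLIT_JWT_SECRET",
}


def load_graphlit_settings(env=os.environ):
    """Read the three client settings from env, raising if any is missing."""
    missing = [var for var in REQUIRED_VARS.values() if not env.get(var)]
    if missing:
        raise RuntimeError("Missing environment variables: " + ", ".join(missing))
    return {arg: env[var] for arg, var in REQUIRED_VARS.items()}


# Usage (requires the graphlit-client package and real credentials):
# graphlit = Graphlit(**load_graphlit_settings())
```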

With our Graphlit client set up, we can proceed to ingest our PDF.

Creating a Workflow and Ingesting Data

To ingest data, you simply create a content workflow that details the content ingestion steps. Once a workflow is created we can specify a content ingestion feed to control how the content is ingested and processed into Graphlit.

Graphlit takes care of ingesting the data, putting it in the vector database, and finding connections between other data using its knowledge graph.

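from graphlit_api import *  # assumption: the SDK's generated input types and enums are exported here

workflow_input = WorkflowInput(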

name="Azure AI Document Intelligence",

preparation=PreparationWorkflowStageInput(

jobs=[

PreparationWorkflowJobInput(

connector=FilePreparationConnectorInput(

type=FilePreparationServiceTypes.AZURE_DOCUMENT_INTELLIGENCE,

azureDocument=AzureDocumentPreparationPropertiesInput(

model=AzureDocumentIntelligenceModels.LAYOUT

)

)

)

]

)

)

response = await graphlit.client.create_workflow(workflow_input)

workflow_id = response.create_workflow.id

Here we are using Azure’s Document Intelligence API to prepare this PDF. It takes the document’s layout into account, including titles, paragraphs, and tables. With our workflow created, we can now ingest our PDF.

Unlike LlamaIndex, where we had to download the document before ingesting it, Graphlit can ingest the PDF directly from a URL or cloud storage. In the code below, I ingest the “Attention is all you need” paper from its arXiv URL using the ingest_uri method. I am also passing the ID of the ingestion workflow I created.

uri='https://arxiv.org/pdf/1706.03762'

response = await graphlit.client.ingest_uri(uri, is_synchronous=True,

workflow=EntityReferenceInput(id=workflow_id))

file_content_id = response.ingest_uri.id

Creating an LLM Specification

Next, we need to create an LLM specification that defines the model, retrieval strategy, and prompt strategy. A specification is an easy way to save an LLM configuration, so it can be reused across multiple conversations in an application. This ensures that your app behaves consistently across all users.

In the code below, I am creating a specification for OpenAI’s GPT-4 Turbo model with settings such as temperature, probability, and the maximum number of completion tokens. Graphlit provides a user-friendly way to configure these settings.

promptStrategy determines how the user’s prompt will be formatted. Here I chose the OPTIMIZE_SEARCH strategy which will convert the prompt to keywords to optimize for semantic search performance.
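To get a feel for what converting a prompt to keywords means, here is a deliberately naive sketch that lowercases the prompt, drops common stopwords, and removes duplicates. It is not how Graphlit implements OPTIMIZE_SEARCH; `to_keywords` and the stopword list are hypothetical and exist only to illustrate the idea.

```python
import re

# A tiny, hand-picked stopword list for illustration only.
STOPWORDS = {"a", "an", "and", "are", "is", "of", "the", "their", "what", "who"}


def to_keywords(prompt):
    """Lowercase the prompt, drop stopwords, and keep each word once, in order."""
    words = re.findall(r"[a-z0-9]+", prompt.lower())
    seen = set()
    keywords = []
    for word in words:
        if word not in STOPWORDS and word not in seen:
            seen.add(word)
            keywords.append(word)
    return keywords


keywords = to_keywords(
    "Who are the authors of the attention is all you need paper "
    "and what are their affiliations?"
)
print(keywords)
```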

The retrievalStrategy determines how Graphlit will retrieve relevant context. Here I have set the strategy to SECTION, which returns whole sections of the paper. This tends to preserve more context than the naive fixed-size chunking that LlamaIndex uses by default. Moreover, in LlamaIndex the chunking is fixed once you index your data, so changing it means re-indexing; with Graphlit, you can choose other retrieval strategies like CHUNK or CONTENT.

spec_input = SpecificationInput(

name="Completion",

type=SpecificationTypes.COMPLETION,

serviceType=ModelServiceTypes.OPEN_AI,

searchType=SearchTypes.VECTOR,

openAI=OpenAIModelPropertiesInput(

model=OpenAIModels.GPT4_TURBO_128K,

temperature=0.1,

probability=0.2,

completionTokenLimit=2048,

),

promptStrategy=PromptStrategyInput(

type=PromptStrategyTypes.OPTIMIZE_SEARCH

),

retrievalStrategy=RetrievalStrategyInput(

type=RetrievalStrategyTypes.SECTION,

contentLimit=10

)

)

response = await graphlit.client.create_specification(spec_input)

spec_id = response.create_specification.id

Creating a Conversation and Prompting

Now that we have ingested our data and created our LLM specification, we can start chatting with our PDF! To start querying, we need to create a Graphlit conversation with our LLM specification. A Conversation in Graphlit is an easy way to manage and group your queries.

In the code below, you can see that I have created a conversation with the LLM specification from the last step. I also added a filter for the content we ingested. Without this filter, Graphlit will search across all the ingested data in your project.

conv_input = ConversationInput(

name="Conversation",

specification=EntityReferenceInput(

id=spec_id

),

filter=ContentCriteriaInput(

workflows=[EntityReferenceInput(

id=workflow_id

)]

)

)

response = await graphlit.client.create_conversation(conv_input)

conversation_id = response.create_conversation.id

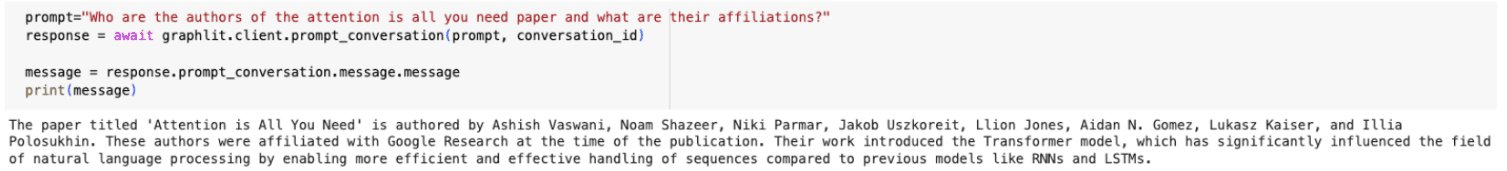

Finally, we can prompt our conversation with the user’s question using the prompt_conversation method and get the response from the LLM. Under the hood, Graphlit adds our prompt to the conversation. It then uses the specification attached to the conversation to retrieve relevant context from the vector database, passes the prompt and the context to OpenAI’s GPT-4 Turbo model, and fetches the response.

prompt = "Who are the authors of the attention is all you need paper and what are their affiliations?"

response = await graphlit.client.prompt_conversation(prompt, conversation_id)

message = response.prompt_conversation.message.message

print(message)

Well done! You have now created a RAG application using Graphlit. So while Graphlit handles the complex tasks of ingesting data, indexing it to a vector database, fetching relevant contexts and querying LLMs, you can focus on configuring the steps in the pipeline and improving the performance of your RAG application. In the next section, let’s see how the two compare.

Comparing LlamaIndex and Graphlit

LlamaIndex requires you to set up and manage your own vector database cluster. This adds complexity to the development process and requires more effort to maintain. On the other hand, Graphlit simplifies this process by automatically ingesting data from the specified feed, chunking it, and storing it in the vector database. You only need to create a workflow and specify a feed. Graphlit can also incorporate multiple data sources, and it automatically extracts knowledge graph entities and the relationships between your data.

Graphlit is also fully hosted, eliminating the need for developers to manage infrastructure and vector databases. This reduces the operational overhead associated with maintaining an AI infrastructure. With LlamaIndex, you need to host your own vector database and your own LLMs (unless you are using an LLM API). With Graphlit, you can focus on developing your RAG application logic while the platform takes care of everything else.

Graphlit provides built-in support for handling conversations in RAG applications.

It offers conversation management capabilities, allowing you to maintain context and provide coherent responses across multiple user interactions. With LlamaIndex, you would need to write more code and manage databases to add conversation handling mechanisms. This will add complexity to your application.
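To give a sense of what conversation handling involves when you write it yourself, here is a minimal in-memory sketch that keeps a bounded message history and folds it into the next prompt. The `Conversation` class is a hypothetical illustration, not part of LlamaIndex or Graphlit, and a real application would also need persistent storage.

```python
class Conversation:
    """Keeps the most recent turns so follow-up questions retain context."""

    def __init__(self, max_turns=3):
        self.max_turns = max_turns
        self.messages = []  # list of (role, text) tuples

    def add(self, role, text):
        """Append a message and trim to the last max_turns user/assistant pairs."""
        self.messages.append((role, text))
        self.messages = self.messages[-2 * self.max_turns:]

    def as_prompt(self, new_question):
        """Render the stored history followed by the new user question."""
        history = "\n".join(f"{role}: {text}" for role, text in self.messages)
        return f"{history}\nuser: {new_question}".strip()


conversation = Conversation(max_turns=1)
conversation.add("user", "Who wrote the paper?")
conversation.add("assistant", "Vaswani et al.")
print(conversation.as_prompt("Where did they work?"))
```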

Graphlit's feed-based approach enables automatic updates of data in the vector database.

As new data becomes available in the specified feed, Graphlit seamlessly ingests and indexes it, keeping your vector database up to date.

With LlamaIndex, you would need to set up an infrastructure to update and re-index the data whenever changes occur, which can be time-consuming and error-prone.
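One common way to approach this yourself is to store a content hash for every indexed document and compare hashes on each run to decide what to re-index. The sketch below shows only that planning step; `plan_reindex` and the sample file names are hypothetical, and the actual embedding and vector database updates are left out.

```python
import hashlib


def content_hash(text):
    """Stable fingerprint of a document's text."""
    return hashlib.sha256(text.encode("utf-8")).hexdigest()


def plan_reindex(documents, indexed_hashes):
    """Compare current documents with the hashes recorded at the last indexing run.

    Returns (to_upsert, to_delete): IDs that are new or changed, and IDs that
    were indexed before but no longer exist.
    """
    to_upsert = [
        doc_id
        for doc_id, text in documents.items()
        if indexed_hashes.get(doc_id) != content_hash(text)
    ]
    to_delete = [doc_id for doc_id in indexed_hashes if doc_id not in documents]
    return to_upsert, to_delete


indexed = {
    "a.pdf": content_hash("old text"),
    "b.pdf": content_hash("same"),
    "c.pdf": content_hash("gone"),
}
current = {"a.pdf": "new text", "b.pdf": "same", "d.pdf": "brand new"}
to_upsert, to_delete = plan_reindex(current, indexed)
print(to_upsert, to_delete)
```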

Finally, Graphlit allows you to easily change and experiment with different language models to suit your application's requirements.

You can switch between models, adjust parameters, and fine-tune the behavior of your RAG application with minimal configuration changes.

Graphlit also enables you to customize and extend workflows by adding additional steps or integrating with external services, providing flexibility in building complex RAG applications. On the other hand, making these changes is more difficult in LlamaIndex.

When Do You Choose Graphlit over LlamaIndex?

Both LlamaIndex and Graphlit are powerful frameworks for building RAG applications. However, Graphlit offers a more streamlined and user-friendly approach. With its hosted solution, automatic data ingestion, and seamless integration with multiple LLMs, Graphlit simplifies the development process and allows developers to focus on building intelligent chatbots without the overhead of managing infrastructure and complex configurations.

Here are some situations where Graphlit truly shines:

- Leveraging Multiple Data Sources: Graphlit allows you to easily integrate multiple data sources into your RAG application. By specifying different feeds, you can ingest data from various sources, such as podcasts, GitHub, web pages, and cloud storage, and Graphlit will automatically establish connections between the data points. This enables you to build comprehensive knowledge bases and provide contextually relevant responses.

- Rapid Prototyping: When you need to quickly prototype and iterate on RAG applications, Graphlit's simplicity and minimal setup requirements make it an ideal choice. You can focus on developing the core functionality of your application without getting bogged down by infrastructure setup and configuration. Graphlit has integrations with the most popular and powerful LLMs available today. This means that developers can leverage the capabilities of these LLMs without the need to worry about the complexities of deploying the models themselves or creating custom integrations with different LLM APIs.

- Integration with Existing Systems: Graphlit can be seamlessly integrated with existing data sources and workflows. Whether you have a pre-existing database, a content management system, or a custom application, Graphlit can ingest data from these sources and augment them with RAG capabilities. This allows you to enhance your existing systems with intelligent retrieval and generation features.

- Iterative Improvement: Graphlit's flexible architecture allows for iterative improvement of RAG applications. As you gather user feedback and insights, you can easily update and refine your data sources, retrieval strategies, and prompt strategies without significant overhead. Graphlit's intuitive interface and configuration options make it easy to experiment and optimize your application over time.

- Small Teams with Minimal Infrastructure Experience: Graphlit's hosted solution eliminates the need for managing infrastructure and vector databases, making it ideal for deploying RAG applications when you have a small team with little time or experience in building AI infrastructure. Graphlit provides RAG-as-a-Service, so your team can focus on building your application logic while Graphlit takes care of the underlying infrastructure, ensuring scalability and reliability.

In summary, Graphlit shines in situations where simplicity, ease of use, and a hosted solution are priorities. Its automatic data ingestion, seamless data integration capabilities, pre-integrated vector and graph databases, and integrations with all the leading LLMs make it an ideal choice for developers and organizations looking to harness the power of retrieval augmented generation without the complexities of building their own AI infrastructure.

Appendix:

Creating a Vector Store and Indexing Data without a Vector Database

Once the data is loaded, you need to create a vector store and index the data. LlamaIndex uses a SentenceSplitter to chunk the documents into smaller pieces and then creates a VectorStoreIndex to store the indexed data.

from llama_index.core.node_parser import SentenceSplitter

from llama_index.core import VectorStoreIndex

text_splitter = SentenceSplitter(chunk_size=512, chunk_overlap=10)

index = VectorStoreIndex.from_documents(

documents, transformations=[text_splitter]

)

You will need to pass the chunk_size and the chunk_overlap values to the SentenceSplitter class. This will determine how the text in the PDF is chunked when uploading to the vector database. Changing these parameters will affect your retrieval accuracy.
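To see how these two parameters interact, here is a simplified word-based sliding-window chunker. It is only an approximation for illustration: LlamaIndex's SentenceSplitter counts tokens and tries to respect sentence boundaries, whereas the hypothetical `chunk_words` below simply counts whitespace-separated words.

```python
def chunk_words(text, chunk_size=8, chunk_overlap=2):
    """Split text into chunks of chunk_size words, each sharing
    chunk_overlap words with the previous chunk."""
    if chunk_overlap >= chunk_size:
        raise ValueError("chunk_overlap must be smaller than chunk_size")
    words = text.split()
    step = chunk_size - chunk_overlap
    chunks = []
    for start in range(0, len(words), step):
        chunks.append(" ".join(words[start:start + chunk_size]))
        if start + chunk_size >= len(words):
            break  # the last window already reached the end of the text
    return chunks


text = " ".join(f"w{i}" for i in range(1, 13))  # "w1 w2 ... w12"
chunks = chunk_words(text, chunk_size=5, chunk_overlap=2)
for chunk in chunks:
    print(chunk)
```

A larger overlap repeats more text across neighboring chunks, which can help when an answer straddles a chunk boundary, at the cost of storing and embedding more data.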

We use the from_documents method of the VectorStoreIndex class to create embeddings of the chunks of our documents. LlamaIndex uses the text-embedding-ada-002 embedding model from OpenAI to create the embeddings.

To persist the vector data, you need to configure a vector database like Chroma or Pinecone. This adds complexity to the setup process and requires additional configuration, so we will not cover it in this post.

Summary

Please email any questions on this article or the Graphlit Platform to questions@graphlit.com.

For more information, you can read our Graphlit Documentation, visit our marketing site, or join our Discord community.